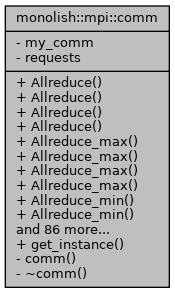

MPI class (singleton) More...

#include <monolish_mpi_core.hpp>

Public Member Functions | |

| double | Allreduce (double val) const |

| MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| float | Allreduce (float val) const |

| MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| int | Allreduce (int val) const |

| MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| size_t | Allreduce (size_t val) const |

| MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| double | Allreduce_max (double val) const |

| MPI_Allreduce (MPI_MAX) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| float | Allreduce_max (float val) const |

| MPI_Allreduce (MPI_MAX) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| int | Allreduce_max (int val) const |

| MPI_Allreduce (MPI_MAX) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| size_t | Allreduce_max (size_t val) const |

| MPI_Allreduce (MPI_MAX) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| double | Allreduce_min (double val) const |

| MPI_Allreduce (MPI_MIN) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| float | Allreduce_min (float val) const |

| MPI_Allreduce (MPI_MIN) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| int | Allreduce_min (int val) const |

| MPI_Allreduce (MPI_MIN) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| size_t | Allreduce_min (size_t val) const |

| MPI_Allreduce (MPI_MIN) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| double | Allreduce_prod (double val) const |

| MPI_Allreduce (MPI_PROD) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| float | Allreduce_prod (float val) const |

| MPI_Allreduce (MPI_PROD) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| int | Allreduce_prod (int val) const |

| MPI_Allreduce (MPI_PROD) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| size_t | Allreduce_prod (size_t val) const |

| MPI_Allreduce (MPI_PROD) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| double | Allreduce_sum (double val) const |

| MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| float | Allreduce_sum (float val) const |

| MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| int | Allreduce_sum (int val) const |

| MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| size_t | Allreduce_sum (size_t val) const |

| MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes. More... | |

| void | Barrier () const |

| Blocks until all processes in the communicator have reached this routine. More... | |

| void | Bcast (double &val, int root) const |

| MPI_Bcast, Broadcasts a message from the process with rank root to all other processes. More... | |

| void | Bcast (float &val, int root) const |

| MPI_Bcast, Broadcasts a message from the process with rank root to all other processes. More... | |

| void | Bcast (int &val, int root) const |

| MPI_Bcast, Broadcasts a message from the process with rank root to all other processes. More... | |

| void | Bcast (monolish::vector< double > &vec, int root) const |

| MPI_Bcast, Broadcasts a message from the process with rank root to all other processes. More... | |

| void | Bcast (monolish::vector< float > &vec, int root) const |

| MPI_Bcast, Broadcasts a message from the process with rank root to all other processes. More... | |

| void | Bcast (size_t &val, int root) const |

| MPI_Bcast, Broadcasts a message from the process with rank root to all other processes. More... | |

| void | Bcast (std::vector< double > &vec, int root) const |

| MPI_Bcast, Broadcasts a message from the process with rank root to all other processes. More... | |

| void | Bcast (std::vector< float > &vec, int root) const |

| MPI_Bcast, Broadcasts a message from the process with rank root to all other processes. More... | |

| void | Bcast (std::vector< int > &vec, int root) const |

| MPI_Bcast, Broadcasts a message from the process with rank root to all other processes. More... | |

| void | Bcast (std::vector< size_t > &vec, int root) const |

| MPI_Bcast, Broadcasts a message from the process with rank root to all other processes. More... | |

| comm (comm &&)=delete | |

| comm (const comm &)=delete | |

| void | Finalize () |

| Terminates MPI execution environment. More... | |

| void | Gather (monolish::vector< double > &sendvec, monolish::vector< double > &recvvec, int root) const |

| MPI_Gather, Gathers vector from all processes The data is evenly divided and transmitted to each process. More... | |

| void | Gather (monolish::vector< float > &sendvec, monolish::vector< float > &recvvec, int root) const |

| MPI_Gather, Gathers vector from all processes The data is evenly divided and transmitted to each process. More... | |

| void | Gather (std::vector< double > &sendvec, std::vector< double > &recvvec, int root) const |

| MPI_Gather, Gathers vector from all processes The data is evenly divided and transmitted to each process. More... | |

| void | Gather (std::vector< float > &sendvec, std::vector< float > &recvvec, int root) const |

| MPI_Gather, Gathers vector from all processes The data is evenly divided and transmitted to each process. More... | |

| void | Gather (std::vector< int > &sendvec, std::vector< int > &recvvec, int root) const |

| MPI_Gather, Gathers vector from all processes The data is evenly divided and transmitted to each process. More... | |

| void | Gather (std::vector< size_t > &sendvec, std::vector< size_t > &recvvec, int root) const |

| MPI_Gather, Gathers vector from all processes The data is evenly divided and transmitted to each process. More... | |

| MPI_Comm | get_comm () const |

| get communicator More... | |

| int | get_rank () |

| get my rank number More... | |

| int | get_size () |

| get the number of processes More... | |

| void | Init () |

| Initialize the MPI execution environment. More... | |

| void | Init (int argc, char **argv) |

| Initialize the MPI execution environment. More... | |

| bool | Initialized () const |

| Indicates whether MPI_Init has been called. More... | |

| void | Irecv (double val, int src, int tag) |

| MPI_Irecv for scalar. Performs a nonblocking recv. More... | |

| void | Irecv (float val, int src, int tag) |

| MPI_Irecv for scalar. Performs a nonblocking recv. More... | |

| void | Irecv (int val, int src, int tag) |

| MPI_Irecv for scalar. Performs a nonblocking recv. More... | |

| void | Irecv (monolish::vector< double > &vec, int src, int tag) |

| MPI_Irecv for monolish::vector. Performs a nonblocking recv. More... | |

| void | Irecv (monolish::vector< float > &vec, int src, int tag) |

| MPI_Irecv for monolish::vector. Performs a nonblocking recv. More... | |

| void | Irecv (size_t val, int src, int tag) |

| MPI_Irecv for scalar. Performs a nonblocking recv. More... | |

| void | Irecv (std::vector< double > &vec, int src, int tag) |

| MPI_Irecv for std::vector. Performs a nonblocking recv. More... | |

| void | Irecv (std::vector< float > &vec, int src, int tag) |

| MPI_Irecv for std::vector. Performs a nonblocking recv. More... | |

| void | Irecv (std::vector< int > &vec, int src, int tag) |

| MPI_Irecv for std::vector. Performs a nonblocking recv. More... | |

| void | Irecv (std::vector< size_t > &vec, int src, int tag) |

| MPI_Irecv for std::vector. Performs a nonblocking recv. More... | |

| void | Isend (const monolish::vector< double > &vec, int dst, int tag) |

| MPI_Isend for monolish::vector. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall(). More... | |

| void | Isend (const monolish::vector< float > &vec, int dst, int tag) |

| MPI_Isend for monolish::vector. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall(). More... | |

| void | Isend (const std::vector< double > &vec, int dst, int tag) |

| MPI_Isend for std::vector. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall(). More... | |

| void | Isend (const std::vector< float > &vec, int dst, int tag) |

| MPI_Isend for std::vector. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall(). More... | |

| void | Isend (const std::vector< int > &vec, int dst, int tag) |

| MPI_Isend for std::vector. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall(). More... | |

| void | Isend (const std::vector< size_t > &vec, int dst, int tag) |

| MPI_Isend for std::vector. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall(). More... | |

| void | Isend (double val, int dst, int tag) |

| MPI_Isend for scalar. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall(). More... | |

| void | Isend (float val, int dst, int tag) |

| MPI_Isend for scalar. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall(). More... | |

| void | Isend (int val, int dst, int tag) |

| MPI_Isend for scalar. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall(). More... | |

| void | Isend (size_t val, int dst, int tag) |

| MPI_Isend for scalar. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall(). More... | |

| comm & | operator= (comm &&)=delete |

| comm & | operator= (const comm &)=delete |

| MPI_Status | Recv (double val, int src, int tag) const |

| MPI_Recv for scalar. Performs a blocking recv. More... | |

| MPI_Status | Recv (float val, int src, int tag) const |

| MPI_Recv for scalar. Performs a blocking recv. More... | |

| MPI_Status | Recv (int val, int src, int tag) const |

| MPI_Recv for scalar. Performs a blocking recv. More... | |

| MPI_Status | Recv (monolish::vector< double > &vec, int src, int tag) const |

| MPI_Recv for monolish::vector. Performs a blocking recv. More... | |

| MPI_Status | Recv (monolish::vector< float > &vec, int src, int tag) const |

| MPI_Recv for monolish::vector. Performs a blocking recv. More... | |

| MPI_Status | Recv (size_t val, int src, int tag) const |

| MPI_Recv for scalar. Performs a blocking recv. More... | |

| MPI_Status | Recv (std::vector< double > &vec, int src, int tag) const |

| MPI_Recv for std::vector. Performs a blocking recv. More... | |

| MPI_Status | Recv (std::vector< float > &vec, int src, int tag) const |

| MPI_Recv for std::vector. Performs a blocking recv. More... | |

| MPI_Status | Recv (std::vector< int > &vec, int src, int tag) const |

| MPI_Recv for std::vector. Performs a blocking recv. More... | |

| MPI_Status | Recv (std::vector< size_t > &vec, int src, int tag) const |

| MPI_Recv for std::vector. Performs a blocking recv. More... | |

| void | Scatter (monolish::vector< double > &sendvec, monolish::vector< double > &recvvec, int root) const |

| MPI_Scatter, Sends data from one task to all tasks. The data is evenly divided and transmitted to each process. More... | |

| void | Scatter (monolish::vector< float > &sendvec, monolish::vector< float > &recvvec, int root) const |

| MPI_Scatter, Sends data from one task to all tasks. The data is evenly divided and transmitted to each process. More... | |

| void | Scatter (std::vector< double > &sendvec, std::vector< double > &recvvec, int root) const |

| MPI_Scatter, Sends data from one task to all tasks. The data is evenly divided and transmitted to each process. More... | |

| void | Scatter (std::vector< float > &sendvec, std::vector< float > &recvvec, int root) const |

| MPI_Scatter, Sends data from one task to all tasks. The data is evenly divided and transmitted to each process. More... | |

| void | Scatter (std::vector< int > &sendvec, std::vector< int > &recvvec, int root) const |

| MPI_Scatter, Sends data from one task to all tasks. The data is evenly divided and transmitted to each process. More... | |

| void | Scatter (std::vector< size_t > &sendvec, std::vector< size_t > &recvvec, int root) const |

| MPI_Scatter, Sends data from one task to all tasks. The data is evenly divided and transmitted to each process. More... | |

| void | Send (double val, int dst, int tag) const |

| MPI_Send for scalar. Performs a blocking send. More... | |

| void | Send (float val, int dst, int tag) const |

| MPI_Send for scalar. Performs a blocking send. More... | |

| void | Send (int val, int dst, int tag) const |

| MPI_Send for scalar. Performs a blocking send. More... | |

| void | Send (monolish::vector< double > &vec, int dst, int tag) const |

| MPI_Send for monolish::vector. Performs a blocking send. More... | |

| void | Send (monolish::vector< float > &vec, int dst, int tag) const |

| MPI_Send for monolish::vector. Performs a blocking send. More... | |

| void | Send (size_t val, int dst, int tag) const |

| MPI_Send for scalar. Performs a blocking send. More... | |

| void | Send (std::vector< double > &vec, int dst, int tag) const |

| MPI_Send for std::vector. Performs a blocking send. More... | |

| void | Send (std::vector< float > &vec, int dst, int tag) const |

| MPI_Send for std::vector. Performs a blocking send. More... | |

| void | Send (std::vector< int > &vec, int dst, int tag) const |

| MPI_Send for std::vector. Performs a blocking send. More... | |

| void | Send (std::vector< size_t > &vec, int dst, int tag) const |

| MPI_Send for std::vector. Performs a blocking send. More... | |

| void | set_comm (MPI_Comm external_comm) |

| set communicator More... | |

| void | Waitall () |

| Waits for all communications to complete. More... | |

Static Public Member Functions | |

| static comm & | get_instance () |

Private Member Functions | |

| comm () | |

| ~comm () | |

Private Attributes | |

| MPI_Comm | my_comm = 0 |

| MPI communicator, MPI_COMM_WORLD. More... | |

| std::vector< MPI_Request > | requests |

Detailed Description

MPI class (singleton)

Definition at line 22 of file monolish_mpi_core.hpp.

Constructor & Destructor Documentation

◆ comm() [1/3]

|

inlineprivate |

Definition at line 28 of file monolish_mpi_core.hpp.

◆ ~comm()

|

inlineprivate |

Definition at line 29 of file monolish_mpi_core.hpp.

◆ comm() [2/3]

|

delete |

◆ comm() [3/3]

|

delete |

Member Function Documentation

◆ Allreduce() [1/4]

| double monolish::mpi::comm::Allreduce | ( | double | val | ) | const |

MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce() [2/4]

| float monolish::mpi::comm::Allreduce | ( | float | val | ) | const |

MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce() [3/4]

| int monolish::mpi::comm::Allreduce | ( | int | val | ) | const |

MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce() [4/4]

| size_t monolish::mpi::comm::Allreduce | ( | size_t | val | ) | const |

MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_max() [1/4]

| double monolish::mpi::comm::Allreduce_max | ( | double | val | ) | const |

MPI_Allreduce (MPI_MAX) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_max() [2/4]

| float monolish::mpi::comm::Allreduce_max | ( | float | val | ) | const |

MPI_Allreduce (MPI_MAX) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_max() [3/4]

| int monolish::mpi::comm::Allreduce_max | ( | int | val | ) | const |

MPI_Allreduce (MPI_MAX) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_max() [4/4]

| size_t monolish::mpi::comm::Allreduce_max | ( | size_t | val | ) | const |

MPI_Allreduce (MPI_MAX) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_min() [1/4]

| double monolish::mpi::comm::Allreduce_min | ( | double | val | ) | const |

MPI_Allreduce (MPI_MIN) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_min() [2/4]

| float monolish::mpi::comm::Allreduce_min | ( | float | val | ) | const |

MPI_Allreduce (MPI_MIN) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_min() [3/4]

| int monolish::mpi::comm::Allreduce_min | ( | int | val | ) | const |

MPI_Allreduce (MPI_MIN) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_min() [4/4]

| size_t monolish::mpi::comm::Allreduce_min | ( | size_t | val | ) | const |

MPI_Allreduce (MPI_MIN) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_prod() [1/4]

| double monolish::mpi::comm::Allreduce_prod | ( | double | val | ) | const |

MPI_Allreduce (MPI_PROD) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_prod() [2/4]

| float monolish::mpi::comm::Allreduce_prod | ( | float | val | ) | const |

MPI_Allreduce (MPI_PROD) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_prod() [3/4]

| int monolish::mpi::comm::Allreduce_prod | ( | int | val | ) | const |

MPI_Allreduce (MPI_PROD) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_prod() [4/4]

| size_t monolish::mpi::comm::Allreduce_prod | ( | size_t | val | ) | const |

MPI_Allreduce (MPI_PROD) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_sum() [1/4]

| double monolish::mpi::comm::Allreduce_sum | ( | double | val | ) | const |

MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_sum() [2/4]

| float monolish::mpi::comm::Allreduce_sum | ( | float | val | ) | const |

MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_sum() [3/4]

| int monolish::mpi::comm::Allreduce_sum | ( | int | val | ) | const |

MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Allreduce_sum() [4/4]

| size_t monolish::mpi::comm::Allreduce_sum | ( | size_t | val | ) | const |

MPI_Allreduce (MPI_SUM) for scalar. Combines values from all processes and distributes the result back to all processes.

- Parameters

-

val scalar value

◆ Barrier()

| void monolish::mpi::comm::Barrier | ( | ) | const |

Blocks until all processes in the communicator have reached this routine.

◆ Bcast() [1/10]

| void monolish::mpi::comm::Bcast | ( | double & | val, |

| int | root | ||

| ) | const |

MPI_Bcast, Broadcasts a message from the process with rank root to all other processes.

- Parameters

-

val scalar value root root rank number

◆ Bcast() [2/10]

| void monolish::mpi::comm::Bcast | ( | float & | val, |

| int | root | ||

| ) | const |

MPI_Bcast, Broadcasts a message from the process with rank root to all other processes.

- Parameters

-

val scalar value root root rank number

◆ Bcast() [3/10]

| void monolish::mpi::comm::Bcast | ( | int & | val, |

| int | root | ||

| ) | const |

MPI_Bcast, Broadcasts a message from the process with rank root to all other processes.

- Parameters

-

val scalar value root root rank number

◆ Bcast() [4/10]

| void monolish::mpi::comm::Bcast | ( | monolish::vector< double > & | vec, |

| int | root | ||

| ) | const |

MPI_Bcast, Broadcasts a message from the process with rank root to all other processes.

- Parameters

-

vec monolish vector (size N) root root rank number

◆ Bcast() [5/10]

| void monolish::mpi::comm::Bcast | ( | monolish::vector< float > & | vec, |

| int | root | ||

| ) | const |

MPI_Bcast, Broadcasts a message from the process with rank root to all other processes.

- Parameters

-

vec monolish vector (size N) root root rank number

◆ Bcast() [6/10]

| void monolish::mpi::comm::Bcast | ( | size_t & | val, |

| int | root | ||

| ) | const |

MPI_Bcast, Broadcasts a message from the process with rank root to all other processes.

- Parameters

-

val scalar value root root rank number

◆ Bcast() [7/10]

| void monolish::mpi::comm::Bcast | ( | std::vector< double > & | vec, |

| int | root | ||

| ) | const |

MPI_Bcast, Broadcasts a message from the process with rank root to all other processes.

- Parameters

-

vec std::vector (size N) root root rank number

◆ Bcast() [8/10]

| void monolish::mpi::comm::Bcast | ( | std::vector< float > & | vec, |

| int | root | ||

| ) | const |

MPI_Bcast, Broadcasts a message from the process with rank root to all other processes.

- Parameters

-

vec std::vector (size N) root root rank number

◆ Bcast() [9/10]

| void monolish::mpi::comm::Bcast | ( | std::vector< int > & | vec, |

| int | root | ||

| ) | const |

MPI_Bcast, Broadcasts a message from the process with rank root to all other processes.

- Parameters

-

vec std::vector (size N) root root rank number

◆ Bcast() [10/10]

| void monolish::mpi::comm::Bcast | ( | std::vector< size_t > & | vec, |

| int | root | ||

| ) | const |

MPI_Bcast, Broadcasts a message from the process with rank root to all other processes.

- Parameters

-

vec std::vector (size N) root root rank number

◆ Finalize()

| void monolish::mpi::comm::Finalize | ( | ) |

Terminates MPI execution environment.

◆ Gather() [1/6]

| void monolish::mpi::comm::Gather | ( | monolish::vector< double > & | sendvec, |

| monolish::vector< double > & | recvvec, | ||

| int | root | ||

| ) | const |

MPI_Gather, Gathers vector from all processes The data is evenly divided and transmitted to each process.

- Parameters

-

sendvec send data, monolish vector (size N) recvvec recv data, monolish vector (size N * # of procs) root root rank number

- Warning

- MPI functions do not support GPUs. The user needs to send and receive data to and from the GPU before and after the MPI function.

◆ Gather() [2/6]

| void monolish::mpi::comm::Gather | ( | monolish::vector< float > & | sendvec, |

| monolish::vector< float > & | recvvec, | ||

| int | root | ||

| ) | const |

MPI_Gather, Gathers vector from all processes The data is evenly divided and transmitted to each process.

- Parameters

-

sendvec send data, monolish vector (size N) recvvec recv data, monolish vector (size N * # of procs) root root rank number

- Warning

- MPI functions do not support GPUs. The user needs to send and receive data to and from the GPU before and after the MPI function.

◆ Gather() [3/6]

| void monolish::mpi::comm::Gather | ( | std::vector< double > & | sendvec, |

| std::vector< double > & | recvvec, | ||

| int | root | ||

| ) | const |

MPI_Gather, Gathers vector from all processes The data is evenly divided and transmitted to each process.

- Parameters

-

sendvec send data, std vector (size N) recvvec recv data, std vector (size N * # of procs) root root rank number

◆ Gather() [4/6]

| void monolish::mpi::comm::Gather | ( | std::vector< float > & | sendvec, |

| std::vector< float > & | recvvec, | ||

| int | root | ||

| ) | const |

MPI_Gather, Gathers vector from all processes The data is evenly divided and transmitted to each process.

- Parameters

-

sendvec send data, std vector (size N) recvvec recv data, std vector (size N * # of procs) root root rank number

◆ Gather() [5/6]

| void monolish::mpi::comm::Gather | ( | std::vector< int > & | sendvec, |

| std::vector< int > & | recvvec, | ||

| int | root | ||

| ) | const |

MPI_Gather, Gathers vector from all processes The data is evenly divided and transmitted to each process.

- Parameters

-

sendvec send data, std vector (size N) recvvec recv data, std vector (size N * # of procs) root root rank number

◆ Gather() [6/6]

| void monolish::mpi::comm::Gather | ( | std::vector< size_t > & | sendvec, |

| std::vector< size_t > & | recvvec, | ||

| int | root | ||

| ) | const |

MPI_Gather, Gathers vector from all processes The data is evenly divided and transmitted to each process.

- Parameters

-

sendvec send data, std vector (size N) recvvec recv data, std vector (size N * # of procs) root root rank number

◆ get_comm()

|

inline |

◆ get_instance()

|

inlinestatic |

Definition at line 39 of file monolish_mpi_core.hpp.

◆ get_rank()

| int monolish::mpi::comm::get_rank | ( | ) |

get my rank number

- Returns

- rank number

◆ get_size()

| int monolish::mpi::comm::get_size | ( | ) |

get the number of processes

- Returns

- the number of prodessed

◆ Init() [1/2]

| void monolish::mpi::comm::Init | ( | ) |

Initialize the MPI execution environment.

◆ Init() [2/2]

| void monolish::mpi::comm::Init | ( | int | argc, |

| char ** | argv | ||

| ) |

Initialize the MPI execution environment.

- Parameters

-

argc Pointer to the number of arguments argv Pointer to the argument vector

◆ Initialized()

| bool monolish::mpi::comm::Initialized | ( | ) | const |

Indicates whether MPI_Init has been called.

- Returns

- true: initialized, false: not initialized

◆ Irecv() [1/10]

| void monolish::mpi::comm::Irecv | ( | double | val, |

| int | src, | ||

| int | tag | ||

| ) |

MPI_Irecv for scalar. Performs a nonblocking recv.

- Parameters

-

val scalar value src rank of source tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Irecv() [2/10]

| void monolish::mpi::comm::Irecv | ( | float | val, |

| int | src, | ||

| int | tag | ||

| ) |

MPI_Irecv for scalar. Performs a nonblocking recv.

- Parameters

-

val scalar value src rank of source tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Irecv() [3/10]

| void monolish::mpi::comm::Irecv | ( | int | val, |

| int | src, | ||

| int | tag | ||

| ) |

MPI_Irecv for scalar. Performs a nonblocking recv.

- Parameters

-

val scalar value src rank of source tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Irecv() [4/10]

| void monolish::mpi::comm::Irecv | ( | monolish::vector< double > & | vec, |

| int | src, | ||

| int | tag | ||

| ) |

MPI_Irecv for monolish::vector. Performs a nonblocking recv.

- Parameters

-

vec monolish::vector (size N) src rank of source tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- MPI functions do not support GPUs. The user needs to send and receive data to and from the GPU before and after the MPI function. This function is not thread-safe.

◆ Irecv() [5/10]

| void monolish::mpi::comm::Irecv | ( | monolish::vector< float > & | vec, |

| int | src, | ||

| int | tag | ||

| ) |

MPI_Irecv for monolish::vector. Performs a nonblocking recv.

- Parameters

-

vec monolish::vector (size N) src rank of source tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- MPI functions do not support GPUs. The user needs to send and receive data to and from the GPU before and after the MPI function. This function is not thread-safe.

◆ Irecv() [6/10]

| void monolish::mpi::comm::Irecv | ( | size_t | val, |

| int | src, | ||

| int | tag | ||

| ) |

MPI_Irecv for scalar. Performs a nonblocking recv.

- Parameters

-

val scalar value src rank of source tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Irecv() [7/10]

| void monolish::mpi::comm::Irecv | ( | std::vector< double > & | vec, |

| int | src, | ||

| int | tag | ||

| ) |

MPI_Irecv for std::vector. Performs a nonblocking recv.

- Parameters

-

vec std::vector (size N) src rank of source tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Irecv() [8/10]

| void monolish::mpi::comm::Irecv | ( | std::vector< float > & | vec, |

| int | src, | ||

| int | tag | ||

| ) |

MPI_Irecv for std::vector. Performs a nonblocking recv.

- Parameters

-

vec std::vector (size N) src rank of source tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Irecv() [9/10]

| void monolish::mpi::comm::Irecv | ( | std::vector< int > & | vec, |

| int | src, | ||

| int | tag | ||

| ) |

MPI_Irecv for std::vector. Performs a nonblocking recv.

- Parameters

-

vec std::vector (size N) src rank of source tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Irecv() [10/10]

| void monolish::mpi::comm::Irecv | ( | std::vector< size_t > & | vec, |

| int | src, | ||

| int | tag | ||

| ) |

MPI_Irecv for std::vector. Performs a nonblocking recv.

- Parameters

-

vec std::vector (size N) src rank of source tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Isend() [1/10]

| void monolish::mpi::comm::Isend | ( | const monolish::vector< double > & | vec, |

| int | dst, | ||

| int | tag | ||

| ) |

MPI_Isend for monolish::vector. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall().

- Parameters

-

vec std::vector (size N) dst rank of destination tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- MPI functions do not support GPUs. The user needs to send and receive data to and from the GPU before and after the MPI function. This function is not thread-safe.

◆ Isend() [2/10]

| void monolish::mpi::comm::Isend | ( | const monolish::vector< float > & | vec, |

| int | dst, | ||

| int | tag | ||

| ) |

MPI_Isend for monolish::vector. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall().

- Parameters

-

vec std::vector (size N) dst rank of destination tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- MPI functions do not support GPUs. The user needs to send and receive data to and from the GPU before and after the MPI function. This function is not thread-safe.

◆ Isend() [3/10]

| void monolish::mpi::comm::Isend | ( | const std::vector< double > & | vec, |

| int | dst, | ||

| int | tag | ||

| ) |

MPI_Isend for std::vector. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall().

- Parameters

-

vec std::vector (size N) dst rank of destination tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Isend() [4/10]

| void monolish::mpi::comm::Isend | ( | const std::vector< float > & | vec, |

| int | dst, | ||

| int | tag | ||

| ) |

MPI_Isend for std::vector. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall().

- Parameters

-

vec std::vector (size N) dst rank of destination tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Isend() [5/10]

| void monolish::mpi::comm::Isend | ( | const std::vector< int > & | vec, |

| int | dst, | ||

| int | tag | ||

| ) |

MPI_Isend for std::vector. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall().

- Parameters

-

vec std::vector (size N) dst rank of destination tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Isend() [6/10]

| void monolish::mpi::comm::Isend | ( | const std::vector< size_t > & | vec, |

| int | dst, | ||

| int | tag | ||

| ) |

MPI_Isend for std::vector. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall().

- Parameters

-

vec std::vector (size N) dst rank of destination tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Isend() [7/10]

| void monolish::mpi::comm::Isend | ( | double | val, |

| int | dst, | ||

| int | tag | ||

| ) |

MPI_Isend for scalar. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall().

- Parameters

-

val scalar value dst rank of destination tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Isend() [8/10]

| void monolish::mpi::comm::Isend | ( | float | val, |

| int | dst, | ||

| int | tag | ||

| ) |

MPI_Isend for scalar. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall().

- Parameters

-

val scalar value dst rank of destination tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Isend() [9/10]

| void monolish::mpi::comm::Isend | ( | int | val, |

| int | dst, | ||

| int | tag | ||

| ) |

MPI_Isend for scalar. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall().

- Parameters

-

val scalar value dst rank of destination tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ Isend() [10/10]

| void monolish::mpi::comm::Isend | ( | size_t | val, |

| int | dst, | ||

| int | tag | ||

| ) |

MPI_Isend for scalar. Performs a nonblocking send. Requests are stored internally. All requests are synchronized by Waitall().

- Parameters

-

val scalar value dst rank of destination tag message tag

- Note

- There is not MPI_Wait() in monolish::mpi, all communication is synchronized by using Waitall() function.

- Warning

- This function is not thread-safe.

◆ operator=() [1/2]

◆ operator=() [2/2]

◆ Recv() [1/10]

| MPI_Status monolish::mpi::comm::Recv | ( | double | val, |

| int | src, | ||

| int | tag | ||

| ) | const |

MPI_Recv for scalar. Performs a blocking recv.

- Parameters

-

val scalar value src rank of source tag message tag

- Returns

- MPI status object

◆ Recv() [2/10]

| MPI_Status monolish::mpi::comm::Recv | ( | float | val, |

| int | src, | ||

| int | tag | ||

| ) | const |

MPI_Recv for scalar. Performs a blocking recv.

- Parameters

-

val scalar value src rank of source tag message tag

- Returns

- MPI status object

◆ Recv() [3/10]

| MPI_Status monolish::mpi::comm::Recv | ( | int | val, |

| int | src, | ||

| int | tag | ||

| ) | const |

MPI_Recv for scalar. Performs a blocking recv.

- Parameters

-

val scalar value src rank of source tag message tag

- Returns

- MPI status object

◆ Recv() [4/10]

| MPI_Status monolish::mpi::comm::Recv | ( | monolish::vector< double > & | vec, |

| int | src, | ||

| int | tag | ||

| ) | const |

MPI_Recv for monolish::vector. Performs a blocking recv.

- Parameters

-

vec monolish::vector (size N) src rank of source tag message tag

- Returns

- MPI status object

- Warning

- MPI functions do not support GPUs. The user needs to send and receive data to and from the GPU before and after the MPI function.

◆ Recv() [5/10]

| MPI_Status monolish::mpi::comm::Recv | ( | monolish::vector< float > & | vec, |

| int | src, | ||

| int | tag | ||

| ) | const |

MPI_Recv for monolish::vector. Performs a blocking recv.

- Parameters

-

vec monolish::vector (size N) src rank of source tag message tag

- Returns

- MPI status object

- Warning

- MPI functions do not support GPUs. The user needs to send and receive data to and from the GPU before and after the MPI function.

◆ Recv() [6/10]

| MPI_Status monolish::mpi::comm::Recv | ( | size_t | val, |

| int | src, | ||

| int | tag | ||

| ) | const |

MPI_Recv for scalar. Performs a blocking recv.

- Parameters

-

val scalar value src rank of source tag message tag

- Returns

- MPI status object

◆ Recv() [7/10]

| MPI_Status monolish::mpi::comm::Recv | ( | std::vector< double > & | vec, |

| int | src, | ||

| int | tag | ||

| ) | const |

MPI_Recv for std::vector. Performs a blocking recv.

- Parameters

-

vec std::vector (size N) src rank of source tag message tag

- Returns

- MPI status object

◆ Recv() [8/10]

| MPI_Status monolish::mpi::comm::Recv | ( | std::vector< float > & | vec, |

| int | src, | ||

| int | tag | ||

| ) | const |

MPI_Recv for std::vector. Performs a blocking recv.

- Parameters

-

vec std::vector (size N) src rank of source tag message tag

- Returns

- MPI status object

◆ Recv() [9/10]

| MPI_Status monolish::mpi::comm::Recv | ( | std::vector< int > & | vec, |

| int | src, | ||

| int | tag | ||

| ) | const |

MPI_Recv for std::vector. Performs a blocking recv.

- Parameters

-

vec std::vector (size N) src rank of source tag message tag

- Returns

- MPI status object

◆ Recv() [10/10]

| MPI_Status monolish::mpi::comm::Recv | ( | std::vector< size_t > & | vec, |

| int | src, | ||

| int | tag | ||

| ) | const |

MPI_Recv for std::vector. Performs a blocking recv.

- Parameters

-

vec std::vector (size N) src rank of source tag message tag

- Returns

- MPI status object

◆ Scatter() [1/6]

| void monolish::mpi::comm::Scatter | ( | monolish::vector< double > & | sendvec, |

| monolish::vector< double > & | recvvec, | ||

| int | root | ||

| ) | const |

MPI_Scatter, Sends data from one task to all tasks. The data is evenly divided and transmitted to each process.

- Parameters

-

sendvec send data, monolish vector (size N) recvvec recv data, monolish vector (size N / # of procs) root root rank number

- Warning

- MPI functions do not support GPUs. The user needs to send and receive data to and from the GPU before and after the MPI function.

◆ Scatter() [2/6]

| void monolish::mpi::comm::Scatter | ( | monolish::vector< float > & | sendvec, |

| monolish::vector< float > & | recvvec, | ||

| int | root | ||

| ) | const |

MPI_Scatter, Sends data from one task to all tasks. The data is evenly divided and transmitted to each process.

- Parameters

-

sendvec send data, monolish vector (size N) recvvec recv data, monolish vector (size N / # of procs) root root rank number

- Warning

- MPI functions do not support GPUs. The user needs to send and receive data to and from the GPU before and after the MPI function.

◆ Scatter() [3/6]

| void monolish::mpi::comm::Scatter | ( | std::vector< double > & | sendvec, |

| std::vector< double > & | recvvec, | ||

| int | root | ||

| ) | const |

MPI_Scatter, Sends data from one task to all tasks. The data is evenly divided and transmitted to each process.

- Parameters

-

sendvec send data, std::vector (size N) recvvec recv data, std::vector (size N / # of procs) root root rank number

◆ Scatter() [4/6]

| void monolish::mpi::comm::Scatter | ( | std::vector< float > & | sendvec, |

| std::vector< float > & | recvvec, | ||

| int | root | ||

| ) | const |

MPI_Scatter, Sends data from one task to all tasks. The data is evenly divided and transmitted to each process.

- Parameters

-

sendvec send data, std::vector (size N) recvvec recv data, std::vector (size N / # of procs) root root rank number

◆ Scatter() [5/6]

| void monolish::mpi::comm::Scatter | ( | std::vector< int > & | sendvec, |

| std::vector< int > & | recvvec, | ||

| int | root | ||

| ) | const |

MPI_Scatter, Sends data from one task to all tasks. The data is evenly divided and transmitted to each process.

- Parameters

-

sendvec send data, std::vector (size N) recvvec recv data, std::vector (size N / # of procs) root root rank number

◆ Scatter() [6/6]

| void monolish::mpi::comm::Scatter | ( | std::vector< size_t > & | sendvec, |

| std::vector< size_t > & | recvvec, | ||

| int | root | ||

| ) | const |

MPI_Scatter, Sends data from one task to all tasks. The data is evenly divided and transmitted to each process.

- Parameters

-

sendvec send data, std::vector (size N) recvvec recv data, std::vector (size N / # of procs) root root rank number

◆ Send() [1/10]

| void monolish::mpi::comm::Send | ( | double | val, |

| int | dst, | ||

| int | tag | ||

| ) | const |

MPI_Send for scalar. Performs a blocking send.

- Parameters

-

val scalar value dst rank of destination tag message tag

◆ Send() [2/10]

| void monolish::mpi::comm::Send | ( | float | val, |

| int | dst, | ||

| int | tag | ||

| ) | const |

MPI_Send for scalar. Performs a blocking send.

- Parameters

-

val scalar value dst rank of destination tag message tag

◆ Send() [3/10]

| void monolish::mpi::comm::Send | ( | int | val, |

| int | dst, | ||

| int | tag | ||

| ) | const |

MPI_Send for scalar. Performs a blocking send.

- Parameters

-

val scalar value dst rank of destination tag message tag

◆ Send() [4/10]

| void monolish::mpi::comm::Send | ( | monolish::vector< double > & | vec, |

| int | dst, | ||

| int | tag | ||

| ) | const |

MPI_Send for monolish::vector. Performs a blocking send.

- Parameters

-

vec monolish::vector (size N) dst rank of destination tag message tag

- Warning

- MPI functions do not support GPUs. The user needs to send and receive data to and from the GPU before and after the MPI function.

◆ Send() [5/10]

| void monolish::mpi::comm::Send | ( | monolish::vector< float > & | vec, |

| int | dst, | ||

| int | tag | ||

| ) | const |

MPI_Send for monolish::vector. Performs a blocking send.

- Parameters

-

vec monolish::vector (size N) dst rank of destination tag message tag

- Warning

- MPI functions do not support GPUs. The user needs to send and receive data to and from the GPU before and after the MPI function.

◆ Send() [6/10]

| void monolish::mpi::comm::Send | ( | size_t | val, |

| int | dst, | ||

| int | tag | ||

| ) | const |

MPI_Send for scalar. Performs a blocking send.

- Parameters

-

val scalar value dst rank of destination tag message tag

◆ Send() [7/10]

| void monolish::mpi::comm::Send | ( | std::vector< double > & | vec, |

| int | dst, | ||

| int | tag | ||

| ) | const |

MPI_Send for std::vector. Performs a blocking send.

- Parameters

-

vec std::vector (size N) dst rank of destination tag message tag

◆ Send() [8/10]

| void monolish::mpi::comm::Send | ( | std::vector< float > & | vec, |

| int | dst, | ||

| int | tag | ||

| ) | const |

MPI_Send for std::vector. Performs a blocking send.

- Parameters

-

vec std::vector (size N) dst rank of destination tag message tag

◆ Send() [9/10]

| void monolish::mpi::comm::Send | ( | std::vector< int > & | vec, |

| int | dst, | ||

| int | tag | ||

| ) | const |

MPI_Send for std::vector. Performs a blocking send.

- Parameters

-

vec std::vector (size N) dst rank of destination tag message tag

◆ Send() [10/10]

| void monolish::mpi::comm::Send | ( | std::vector< size_t > & | vec, |

| int | dst, | ||

| int | tag | ||

| ) | const |

MPI_Send for std::vector. Performs a blocking send.

- Parameters

-

vec std::vector (size N) dst rank of destination tag message tag

◆ set_comm()

| void monolish::mpi::comm::set_comm | ( | MPI_Comm | external_comm | ) |

set communicator

◆ Waitall()

| void monolish::mpi::comm::Waitall | ( | ) |

Waits for all communications to complete.

Member Data Documentation

◆ my_comm

|

private |

MPI communicator, MPI_COMM_WORLD.

Definition at line 27 of file monolish_mpi_core.hpp.

◆ requests

|

private |

Definition at line 31 of file monolish_mpi_core.hpp.

The documentation for this class was generated from the following file: